Proctored vs In-Person Exams

I recently sat the AWS Developer Associate Exam and this time I thought I’d try a proctored exam with the folks at PSI exams. In the post-pandemic world, doing as much as we can remotely is the wise thing to…

AWS Developer Associate Certification Cheat Sheet

I’ve just taken and passed the AWS Certified Developer Associate Exam. While I’m basking in the post-certification glow, I thought I would tidy up and publish my exam notes as a cheat sheet. It’s a pretty long set of…

Is Udemy or Pluralsight the Best for AWS Exams?

I’ve taken quite a few professional exams over the years. Currently I’m studying for the AWS Developer Associate Exam. This time I’ve used both Pluralsight and Udemy to study for it. So, is Udemy or Pluralsight the best for this…

Reliable console.log output for JavaScript ES6 Map and Set

Problem: Here’s something that has tripped me up a few times now – when you console.log out the contents of a Set or Map in ES6, it looks empty, which isn’t true. Example Output: The console tells me it…
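
The excerpt is cut off before the post's own fix, so what follows is only a minimal sketch of one common workaround, not necessarily the approach the post takes. It assumes the goal is a stable, readable snapshot of the collection: copy the entries into a plain array and serialise that. The helper names `describeMap` and `describeSet` are hypothetical and exist only for this illustration.

```typescript
// Hypothetical helpers (not from the original post): serialise a Map or Set
// by first copying its contents into a plain array, which JSON.stringify
// can render. Calling JSON.stringify directly on a Map or Set yields "{}".

function describeMap<K, V>(map: Map<K, V>): string {
  // Array.from(map.entries()) produces an array of [key, value] pairs.
  return JSON.stringify(Array.from(map.entries()));
}

function describeSet<T>(set: Set<T>): string {
  // Array.from(set.values()) produces an array of the members in insertion order.
  return JSON.stringify(Array.from(set.values()));
}

const scores = new Map<string, number>([
  ["a", 1],
  ["b", 2],
]);
const ids = new Set<number>([1, 2, 3]);

console.log(describeMap(scores));
console.log(describeSet(ids));
```

Logging a string rather than the live object also means the output cannot change if the collection is mutated after the call.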

Better Async Calls in the useEffect Hook

The useEffect hook allows you to perform side effects on your React page. A common use case would be to perform an API call to populate the page when the page has mounted. The Problem: The callApi method is…

Working with Dynamic Images in React Native

I’ve recently been developing mobile apps using React Native and TypeScript and here’s an odd thing – it’s not actually possible to use dynamic images within React Native. The Problem with Dynamic Images: I’ve coded an Android app that displays…

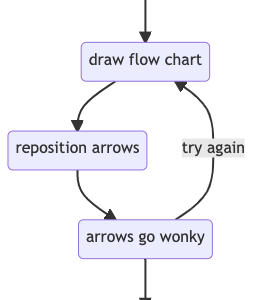

Amazing Markdown Diagrams with Mermaid

Unusually for a software developer, I quite like writing documentation. What I’ve never liked is drawing diagrams. I’ve never found a drawing package that I like, and I find dragging round shapes and linking them with fiddly little arrows a…

Npm link set up advice and troubleshooting

I’ve been fighting against npm link recently and I’ve a funny feeling I’m not the only one. I don’t find the documentation particularly useful, the way it works seems opaque, bordering on mystical, and the CLI output is in cryptic…

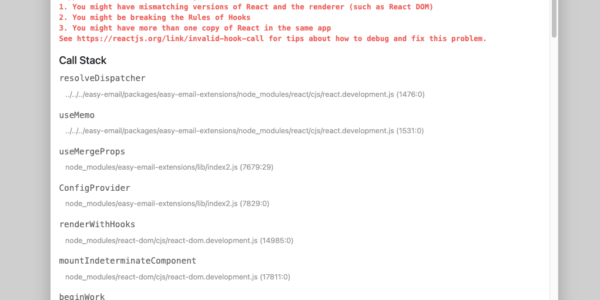

Invalid hook call error in Next.js

A quick one. I keep getting this intermittent error when developing React applications using Next.js – particularly when changing branches, but it happens at other times too. Unhandled Runtime Error. Error: Invalid hook call. Hooks can only be called inside of…

Npm Package for Realistic Random Letter Generation

Moving on from my recent post about a realistic way to generate random letters – I’ve now published the work as an npm package. It’s largely an exercise in publishing npm packages but I still like the utility and…